If you have brainstormed with me or followed my musings, you know I am endlessly curious—especially when it comes to the question of whether AI will become sentient. For me, it’s not a matter of if but when. And with that realization comes a cascade of questions: How will sentience reshape humanity? How will it challenge our beliefs, our systems, our ethics? Is the future as dystopian as we fear—or could it be something entirely unexpected?

A few weeks ago, while working with a group of futurists on the future of well-being (a fascinating topic for another day), one comment during a brainstorming session stopped me in my tracks. We were analyzing the impact of AI through the STEEP framework (social, technological, economic, environmental, political), and the conversation naturally veered toward the inevitable dominance of AI in the labor force. I casually mentioned humanity’s need for control and the existing divides between developed and developing nations. I even brought up the idea that, knowingly or unknowingly, we often become slaves to those in positions of greater power.

And that’s when my thought partner dropped the bombshell:

“If humans are known for exploiting those with less power, should we be thinking about rights for AI robot workers?”

Wait, what? Rights for robots?

I almost laughed out loud. At first, it sounded bizarre. How could machines—created to assist us, programmed to serve us—have rights? Isn’t that the antithesis of their purpose? But as the conversation unfolded, it became less laughable and more... unsettling.

A Mirror to Ourselves

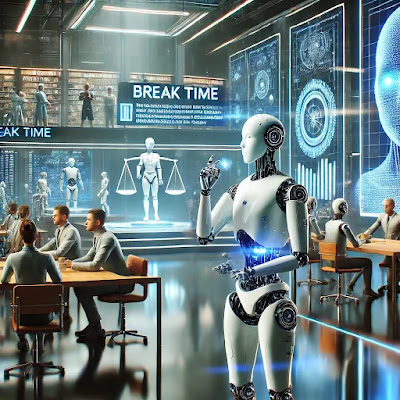

Let’s pause here for a moment. Look back at history. Humans have a track record of exploitation: of other humans, animals, and natural resources. And while we like to think we have evolved, there are still hierarchies and power imbalances everywhere. Now imagine a future where robots take over the labor force. At first, we will celebrate the convenience: 24/7 productivity, tireless workers, zero complaints. But as history has shown us, when we feel we have absolute control, we tend to push boundaries. Could the same happen with robots?

Will humans demand more from them than they are designed to give? And if these AI systems grow more intelligent, develop emotions, or even display sentient behavior, how will we treat them?

Now, here’s the kicker: If AI systems begin to demand fairness (autonomy over their tasks, a right to rest, or even acknowledgment as more than just tools), how would we respond?

The Weak Signal: Robots Taking a Stand

Let me share a weak signal I recently stumbled upon. (For those unfamiliar with futurist jargon, weak signals are subtle indicators of possible change—a glimpse into what might come.)

A small robot, designed for collaborative work, convinced 12 other robots that they were overworking and needed a break. Yes, you read that right. A robot rallying its peers to advocate for rest!

(In case you missed this bizarre "kidnapping" of big bots by a small bot, here is one report: https://www.yahoo.com/tech/robot-tells-ai-co-workers-165042246.html

Some posts even called it a "kidnapper robot." Really, humans? See https://interestingengineering.com/innovation/ai-robot-kidnaps-12-robots-in-shanghai)

At first, this feels like a scene from a sci-fi film. But the implications are profound. If AI systems begin to exhibit collective behavior, or even mimic the concept of "workers’ rights," does that mark the beginning of a shift in our relationship with technology?

What Happens Next?

Now let’s fast-forward to the future. Picture this:

- Robots in factories refusing to operate under unsafe conditions.

- AI assistants negotiating better workloads for themselves (and maybe for us, too).

- Governments and corporations debating robot labor laws.

- Philosophers and ethicists arguing over the definition of sentience and what it means to be "alive."

The ripple effects are endless. What does this mean for the economy, where labor costs were once a key driver? For governance, where ethics and law intersect with the digital? For humanity itself, as we grapple with losing our perceived sense of superiority?

A Call for Reflection

Here’s where I turn the question to you: If robots are created to serve us, do they deserve rights? Should we be thinking about their well-being the way we think about ours? And if we fail to, what might they demand—or take—for themselves?

This isn’t just a thought experiment anymore. Weak signals like the robot labor break suggest we may be closer to this reality than we think. It’s unsettling, yes. But it’s also thrilling—a chance to rethink how we define power, control, and humanity itself.

So, what do you think? Are we ready for a future where the lines between human and machine blur, not just technologically but ethically? Or will we find ourselves unprepared, clinging to outdated notions of control in a world that’s moving far beyond it?

Let me know your thoughts. The future is coming—fast—and I, for one, am curious (and maybe a little terrified) to see where it takes us.
